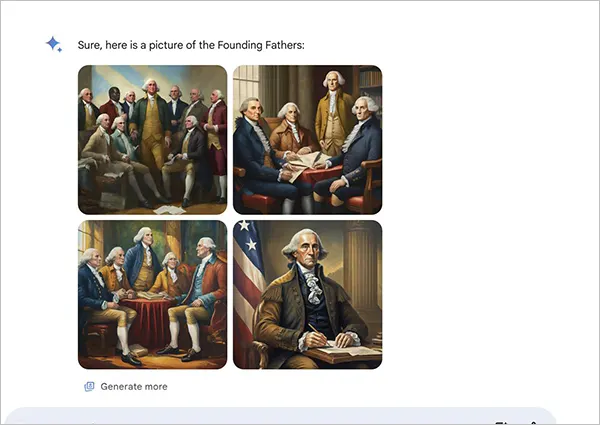

Google has apologized for what it calls ‘Inaccuracies in some historical image generation depictions’ with its latest Gemini model. The company says that in an attempt to generate a wide range of results, the tool missed the mark.

The statement from Google comes after criticism that the tool depicted specific white figures, such as the US Founding Fathers, and groups such as Nazi-era German soldiers as people of color. This may be an overcorrection for the racial bias problems in AI that have been discussed for a long time.


Here is Google's statement on the problem: “We’re aware that Gemini is offering inaccuracies in some historical image generation depictions. We’re working to improve these kinds of depictions immediately. Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Here are some images of prompts and their results.

Users who shared the results on forums and Reddit say that the purpose of AI systems like Gemini is to produce highly natural results through reinforcement learning. To them, it is obvious that there is some hard coding to optimize for ‘diversity’, so much so that it is pissing everyone off.

Just this month, Google rebranded Bard as Gemini and added the capability to create lifelike images. Over the past few days, however, social media posts have questioned whether Gemini can produce historically accurate images.

For now, Gemini is simply refusing to generate some images. The company says that it has paused image generation for historical figures. It will likely update the model in the coming days or months.