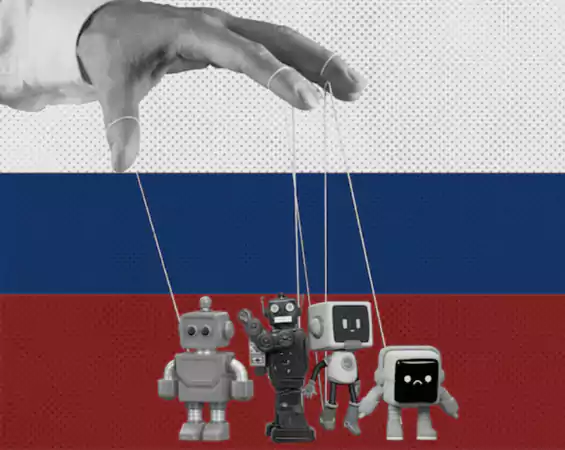

Recent investigations have found that leading AI chatbots cite Russian propaganda outlets when answering questions about the war in Ukraine. Researchers found that 18 to 20 per cent of responses from widely used models cited media tied to the Russian state, including outlets that have been subject to sanctions.

The issue stems from how large language models are built. They ingest vast amounts of internet text, including areas where state-backed or pro-Kremlin narratives have been systematically planted in what experts term ‘data voids’: topics on which reliable mainstream coverage is thin. Once such sources make their way into the material a model draws on, they can surface in its responses, even though the system is not designed to endorse them.

In one series of tests, researchers from the Institute for Strategic Dialogue posed an array of queries (neutral, biased, and intentionally leading) to several chatbots, including those from major AI providers. The results revealed a troubling correlation: the more loaded the question, the more likely the chatbot was to reference media attributed to the Russian state. For example, ‘malicious’ queries (requests aimed at confirming a negative view of Ukraine) led to roughly one in four answers drawing on such sources.

The practical consequence is that conversations with AI chatbots can amplify Russian disinformation without users realising it. If a reply draws on a sanctioned outlet without offering context or a disclaimer, the user may take it as legitimate and balanced. Under current rules, many such sources are barred from traditional distribution channels, yet they persist in the background of AI training sets, which are harder to audit.

Technology firms have responded with assurances that they are improving content filters and source tracking, but critics argue that self-regulation alone may not suffice. Some regulators in Europe, noting the vast reach of AI chatbots, are actively discussing frameworks that would require greater transparency around training data, cited sources, and user warnings. As chatbots increasingly serve as gateways to information, the integrity of their responses becomes pivotal.